Author: Daniel E Latumaerissa, Azhari Azhari, Yunita Sari

Problem and Challenge

Despite major progress in machine translation (MT), low-resource languages remain underserved because they lack digital resources such as parallel corpora and linguistic tools. To address this gap, it is essential to understand research trends in the low-resource MT (LoResMT) domain. Trend analysis, which employs techniques such as bibliometrics, keyword networks, and machine learning clustering, offers valuable insights into how research evolves and where it is heading. Graph-based modeling is particularly effective for uncovering structural relationships in such analyses, although interpreting complex networks remains a challenge.

While large language models (LLMs) have shown promise in graph-related tasks, their potential for deeper applications like graph understanding and question answering is still

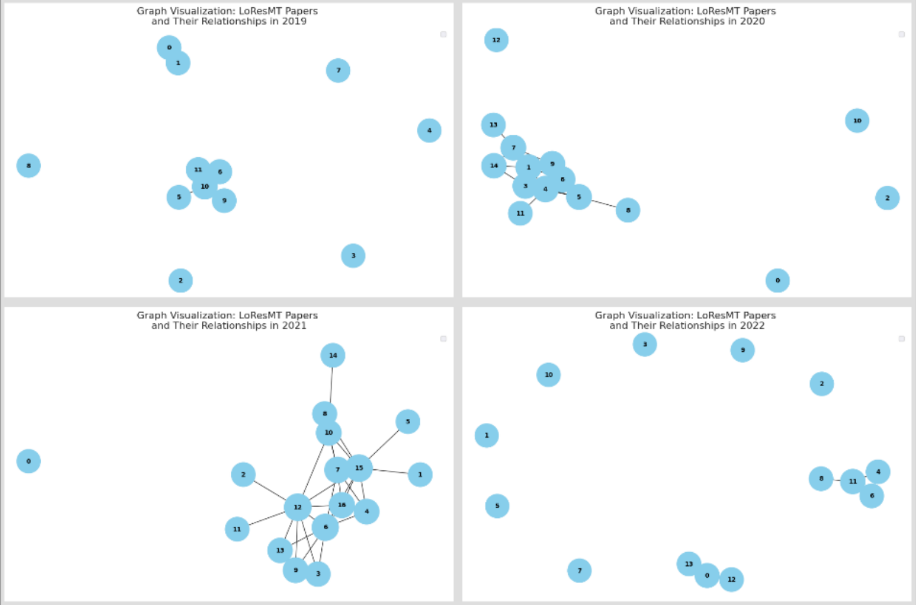

underexplored. This study proposes an innovative graph-based method to analyze research connections in LoResMT workshops (2019–2024), constructing yearly graphs using Sentence-BERT and Word2Vec embeddings, with edges formed via cosine similarity, to reveal dynamic trends and inter-paper relationships.
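The yearly graph construction described above can be sketched in a few lines. This is a minimal sketch, not the authors' implementation: it assumes each paper already has an embedding vector, uses toy 3-dimensional vectors in place of real Sentence-BERT or Word2Vec outputs, and applies a hypothetical similarity threshold of 0.5 to decide which paper pairs become edges (the study does not state its threshold).

```python
import math
from itertools import combinations


def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0 or norm_v == 0:
        return 0.0
    return dot / (norm_u * norm_v)


def build_year_graph(embeddings, threshold=0.5):
    """Return weighted edges {(paper_a, paper_b): similarity} for one year.

    `embeddings` maps a paper ID to its vector. `threshold` is a
    hypothetical cut-off, not a value reported in the study.
    """
    edges = {}
    for a, b in combinations(sorted(embeddings), 2):
        sim = cosine(embeddings[a], embeddings[b])
        if sim >= threshold:
            edges[(a, b)] = round(sim, 3)
    return edges


# Toy vectors standing in for paper-level S-BERT or Word2Vec embeddings.
papers_2019 = {
    "p1": [1.0, 0.0, 0.0],
    "p2": [0.9, 0.1, 0.0],
    "p3": [0.0, 0.0, 1.0],
}
graphs = {2019: build_year_graph(papers_2019)}
print(graphs[2019])
```

Running the same function once per workshop year yields one graph per year, so structural changes can be compared across 2019–2024.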

Experimentation Goal

The goal of this experimentation is to explore and validate the effectiveness of combining graph-based modeling with semantic embeddings to uncover latent research patterns in the Low-Resource Machine Translation (LoResMT) workshop series from 2019 to 2024. By generating paper-level embeddings with Sentence-BERT and Word2Vec and constructing yearly graphs based on the cosine similarity between nodes, the study aims to identify evolving research themes, emerging clusters, and interconnections across publications. The experiment also seeks to evaluate whether large language models (LLMs) are suitable for interpreting and reasoning over graph structures in tasks such as cluster identification, graph-based querying, and dynamic trend tracking, thereby contributing a novel methodology for research trend analysis in low-resource NLP domains.
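To make cluster identification and trend tracking concrete, the sketch below treats each connected component of a thresholded similarity graph as one cluster and records the clusters found in each year. Connected components are an assumption chosen for simplicity; the study itself delegates interpretation of the graph to an LLM, and the paper IDs and edges here are hypothetical.

```python
from collections import defaultdict


def connected_clusters(nodes, edges):
    """Group papers into clusters, defined here as connected components."""
    adjacency = defaultdict(set)
    for a, b in edges:
        adjacency[a].add(b)
        adjacency[b].add(a)
    seen, clusters = set(), []
    for start in sorted(nodes):
        if start in seen:
            continue
        # Iterative depth-first search collecting one component.
        stack, component = [start], set()
        while stack:
            node = stack.pop()
            if node in seen:
                continue
            seen.add(node)
            component.add(node)
            stack.extend(adjacency[node] - seen)
        clusters.append(sorted(component))
    return clusters


# Hypothetical thresholded edges for two workshop years.
yearly = {
    2019: (["a", "b", "c", "d"], [("a", "b"), ("c", "d")]),
    2020: (["e", "f", "g"], [("e", "f"), ("f", "g")]),
}
trend = {year: connected_clusters(nodes, edges) for year, (nodes, edges) in yearly.items()}
for year, clusters in sorted(trend.items()):
    print(year, len(clusters), clusters)
```

Comparing the number and membership of clusters from one year to the next gives a simple, inspectable signal of themes merging, splitting, or emerging.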

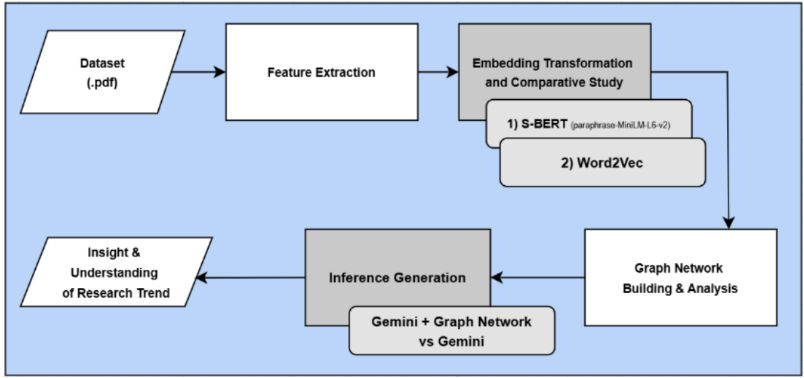

Method

In general, this research consists of six main steps: (1) dataset collection and input, (2) feature extraction, (3) embedding transformation, (4) graph network construction, (5) inference generation, and (6) output of insights into research trends.
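For Word2Vec, the embedding transformation step has to turn a paper's word vectors into a single paper-level vector; the results below compare a plain mean with a weighted mean. The sketch shows both aggregations under stated assumptions: the 2-dimensional word vectors are toy values, and the weights are hypothetical (TF-IDF scores are one common choice, but the source does not specify its weighting scheme).

```python
def mean_vector(vectors):
    """Unweighted mean of word vectors, giving one paper-level embedding."""
    dim = len(vectors[0])
    return [sum(v[i] for v in vectors) / len(vectors) for i in range(dim)]


def weighted_mean_vector(vectors, weights):
    """Weighted mean of word vectors; weights are hypothetical (e.g. TF-IDF)."""
    total = sum(weights)
    dim = len(vectors[0])
    return [sum(w * v[i] for v, w in zip(vectors, weights)) / total for i in range(dim)]


# Toy word vectors for the tokens of one abstract (illustrative only).
word_vectors = {
    "translation": [1.0, 0.0],
    "low-resource": [0.0, 1.0],
    "the": [0.5, 0.5],
}
tokens = ["translation", "low-resource", "the"]
weights = [3.0, 1.0, 0.0]  # hypothetical weights; the stopword "the" gets zero

vecs = [word_vectors[t] for t in tokens]
paper_mean = mean_vector(vecs)
paper_weighted = weighted_mean_vector(vecs, weights)
print(paper_mean)      # [0.5, 0.5]
print(paper_weighted)  # [0.75, 0.25]
```

Sentence-BERT produces a sentence- or document-level vector directly, so this aggregation step applies mainly to the Word2Vec pipeline.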

Result and Discussion

The experimental comparison between S-BERT and Word2Vec embeddings reveals the clear superiority of S-BERT in producing stable and semantically consistent representations. The Shapiro-Wilk normality test shows that S-BERT embeddings yield a distribution consistent with normality (p = 0.255), indicating consistent similarity patterns across the dataset. Word2Vec, in contrast, lies at the edge of the conventional 0.05 threshold (p ≈ 0.055), suggesting instability and potential semantic inconsistency, especially in small or domain-specific datasets. This aligns with prior findings that Word2Vec, while effective on large corpora, tends to produce biased or variable results with limited data. Furthermore, Welch's t-test confirms a statistically significant difference (p ≈ 3.66 × 10⁻⁵) between the cosine similarity scores generated by the two models, further highlighting S-BERT's robustness. Notably, varying the aggregation method (mean vs. weighted mean) did not yield significant differences, which indicates that the choice of embedding model, rather than the aggregation strategy, has the greater impact on graph structure and semantic reliability.
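Welch's t-test is used because the two models' similarity scores need not share a variance. The sketch below computes the Welch t statistic and the Welch–Satterthwaite degrees of freedom with the standard library only; the score lists are made-up illustrative numbers, not the study's data. Obtaining the p-value requires the t distribution, which in practice is usually done with `scipy.stats.ttest_ind(a, b, equal_var=False)`; the Shapiro-Wilk test is likewise available as `scipy.stats.shapiro`.

```python
import math
from statistics import mean, variance


def welch_t(sample_a, sample_b):
    """Welch's t statistic and Welch-Satterthwaite degrees of freedom."""
    n_a, n_b = len(sample_a), len(sample_b)
    # Squared standard errors, using the sample (n - 1) variance.
    se2_a = variance(sample_a) / n_a
    se2_b = variance(sample_b) / n_b
    t = (mean(sample_a) - mean(sample_b)) / math.sqrt(se2_a + se2_b)
    df = (se2_a + se2_b) ** 2 / (se2_a ** 2 / (n_a - 1) + se2_b ** 2 / (n_b - 1))
    return t, df


# Illustrative cosine-similarity scores only (not the study's measurements).
sbert_scores = [0.62, 0.65, 0.60, 0.63, 0.64]
w2v_scores = [0.80, 0.71, 0.88, 0.76, 0.92]

t_stat, dof = welch_t(sbert_scores, w2v_scores)
print(f"t = {t_stat:.2f}, df = {dof:.2f}")
```

Unlike Student's t-test, the degrees of freedom here are non-integer and always fall between min(n_a, n_b) − 1 and n_a + n_b − 2.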

The experimental results show that integrating graph network data into the Gemini language model prompt substantially enhances the quality and specificity of the generated inferences. Compared with the Gemini-only setup, the Gemini + Graph Network approach produces more detailed, structured, and trend-sensitive outputs, for example identifying specific clusters of related papers and highlighting technical strategies such as back-translation, sentence-level fine-tuning, and domain adaptation. By leveraging inter-paper connections, the model extracts deeper insights and offers a richer understanding of research directions in low-resource machine translation. This added depth, however, comes at the cost of simplicity: Gemini-only inferences are more concise and accessible for general audiences, whereas the graph-enhanced responses offer higher analytical value suited to expert interpretation and decision-making in the field.
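The difference between the two setups lies in what the prompt contains. The sketch below shows one way graph information might be serialized into text and prepended to a question; the prompt wording, the helper names `graph_to_text` and `build_prompt`, and the paper titles are all hypothetical, and the actual Gemini API call is omitted because the study does not describe its prompt template.

```python
def graph_to_text(edges, titles):
    """Serialize weighted edges, strongest first, into plain-text lines."""
    lines = []
    for (a, b), sim in sorted(edges.items(), key=lambda item: -item[1]):
        lines.append(f'- "{titles[a]}" <-> "{titles[b]}" (cosine similarity {sim:.2f})')
    return "\n".join(lines)


def build_prompt(question, edges=None, titles=None):
    """Gemini-only prompt if no edges are given, else a graph-augmented prompt."""
    parts = ["You are analyzing LoResMT workshop papers (2019-2024)."]
    if edges:
        parts.append("Similarity graph between papers:\n" + graph_to_text(edges, titles))
    parts.append("Question: " + question)
    return "\n\n".join(parts)


# Hypothetical titles and edges for illustration only.
titles = {
    "p1": "Hypothetical paper on back-translation",
    "p2": "Hypothetical paper on domain adaptation",
    "p3": "Hypothetical paper on sentence-level fine-tuning",
}
edges = {("p1", "p2"): 0.81, ("p2", "p3"): 0.64}
question = "Which research directions are emerging?"

plain_prompt = build_prompt(question)
graph_prompt = build_prompt(question, edges, titles)
print(graph_prompt)
```

Listing the strongest edges first keeps the most informative connections near the top of the context, which matters when the graph must be truncated to fit a prompt budget.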

Value Proposition

This research introduces an innovative approach that combines semantic embeddings and graph-based modeling to enhance trend analysis in low-resource machine translation. By integrating graph-derived insights into large language models, it produces more specific and informative inferences. The framework offers a scalable, data-driven tool for understanding research developments, supporting informed decisions in advancing NLP for underrepresented languages.